Load Balancing

Loadbalance requests between multiple LLMs

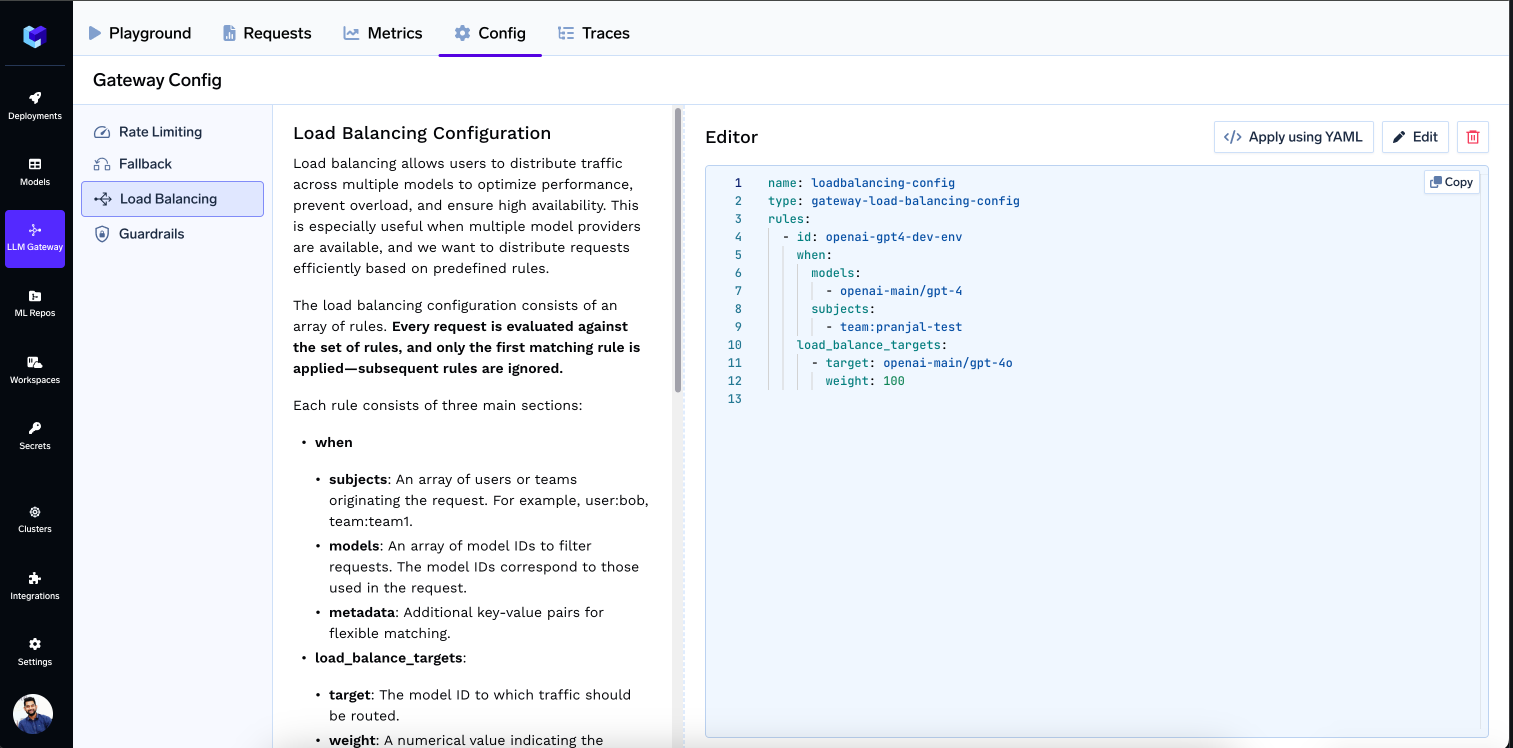

Load balancing allows users to distribute traffic across multiple models to optimize performance, prevent overload, and ensure high availability. This is especially useful when multiple model providers are available, and we want to distribute requests efficiently based on predefined rules.

Rules

The load balancing configuration consists of an array of rules. Every request is evaluated against the set of rules, and only the first matching rule is applied—subsequent rules are ignored.

Each rule consists of five main sections:

- A

configblock (only for latency-based rules) to fine-tune routing behavior.

- Id:

- Unique identifier for a rule

- type:

- Load balancing type, either

weight-based-routingorlatency-based-routing.

- Load balancing type, either

- when:

- subjects: An array of users or teams originating the request. For example, user:bob, team:team1.

- models: An array of model IDs to filter requests. The model IDs correspond to those used in the request.

- metadata: Additional key-value pairs for flexible matching.

- load_balance_targets:

- target: The model ID to which traffic should be routed.

- For

latency-based-routingtargets are selected dynamically based on per-token latency. Routing is done to the lowest-latency targets within a specified margin.

- For

- weight: A numerical value indicating the proportion of traffic that should be routed to the target model. The sum of all weights across different targets should ideally add up to 100, ensuring proper distribution. Not applicable for

latency-based-routingtype - override_params (optional): A key-value object used to modify or extend the request body parameters when forwarding requests to the target model. Each key corresponds to a parameter name, and the associated value specifies what should be sent to the target. This allows you to override existing parameters from the original request or introduce entirely new ones, enabling flexible customization for each target model during load balancing

- target: The model ID to which traffic should be routed.

- config:

- lookback_window_minutes: Defines the time window (in minutes) used to calculate the average latency per token for each model. Accepts values from 1 to 60. Default is 10

- allowed_latency_overhead_percentage: Allows inclusion of models whose latency is within a certain percentage of the best-performing model. For example, if the fastest model has 1s latency and this is set to 50%, any model with latency <= 1.5s will be considered for routing.

- A model must have at least 3 requests in the lookback_window_minutes to be eligible based on latency. If a model has fewer than 3 requests, it is still included in routing to ensure warm-up.

- Once a model reaches 3 or more requests in the lookback window, its actual latency metrics are used for routing decisions.

Model Configs

The load balancing configuration consists of an array of model configs. The model_configs block defines global constraints for individual models. These configurations apply across all load balancing rules and are matched by the model name.

- model: Must exactly match the model name used in truefoundry.

- usage_limits (optional):

- tokens_per_minute (optional): Maximum number of tokens allowed per minute. If this limit is reached, the model will be excluded from all routing decisions.

- requests_per_minute(optional): Maximum number of requests per minute. If exceeded, the model is skipped.

- If not provided, the limit is considered unlimited.

- failure_tolerance (optional):

- allowed_failures_per_minute: Number of allowed failures per minute.

- cooldown_period_minutes: How long (in minutes) the model is marked unhealthy after hitting failure limits.

- If

failure_toleranceis not defined, failure detection is disabled. If defined, both fields are required.

Let's say you want to setup load balancing based on following rules:

- Distribute traffic for the gpt4 model from the openai-main account for user [email protected] with 70% routed to azure-gpt4 and 30% to openai-main-gpt4. The Azure target also overrides a few request parameters like temperature, max_tokens, and top_p.

- Distribute traffic for the llama3 model from Bedrock for customer1 with 60% routed to azure-bedrock and 40% to aws-bedrock.

- Use latency-based routing between two claude models for internal evaluation use cases, preferring the model with the lowest average token latency in the last 10 minutes, and allowing fallback within 25% latency margin.

Your load balancing config would look like this:

name: loadbalancing-config

type: gateway-load-balancing-config

model_configs:

- model: "azure/gpt4"

usage_limits:

tokens_per_minute: 50000

requests_per_minute: 100

failure_tolerance:

allowed_failures_per_minute: 3

cooldown_period_minutes: 5

- model: "anthropic/claude-3-sonnet"

usage_limits:

tokens_per_minute: 60000

requests_per_minute: 120

failure_tolerance:

allowed_failures_per_minute: 2

cooldown_period_minutes: 4

# The rules are evaluated in order. Once a request matches one rule,

# the subsequent rules are not checked.

rules:

# Distribute traffic for gpt4 model from openai-main account for user:bob with 70% to azure-gpt4 and 30% to openai-main-gpt4. The azure target also overrides a few request parameters like temperature and max_tokens

- id: "openai-gpt4-dev-env"

type: weight-based-routing

when:

subjects: ["user:[email protected]"]

models: ["openai-main/gpt4"]

metadata:

env: dev

load_balance_targets:

- target: "azure/gpt4"

weight: 70

override_params:

temperature: 0.5

max_tokens: 800

top_p: 0.9

- target: "openai-main/gpt4"

weight: 30

# Distribute traffic for llama model from bedrock for customer1 with 60% to azure-bedrock and 40% to aws-bedrock

- id: "llama-bedrock-customer1"

type: weight-based-routing

when:

models: ["bedrock/llama3"]

metadata:

customer-id: customer1

load_balance_targets:

- target: "azure/bedrock-llama3"

weight: 60

- target: "aws/bedrock-llama3"

weight: 40

# Latency-based routing between two Claude models for internal eval traffic, allowing fallback within 25% of best latency

- id: "latency-based-claude-3"

type: latency-based-routing

when:

models: ["anthropic/claude-3"]

metadata:

env: internal

config:

lookback_window_minutes: 10

allowed_latency_overhead_percentage: 25

load_balance_targets:

- target: "anthropic/claude-3-opus"

- target: "anthropic/claude-3-sonnet"Configure Load Balancing on Gateway

It's straightforward—simply go to the Config tab in the Gateway, add your configuration, and save.

Updated 2 days ago