Self-hosted Models

TrueFoundry offers a solution to deploy open-source or private models and integrate them directly into the Gateway. This capability also extends to fine-tuned models and embedding models.

Refer to our docs on Deploying LLMs From Model Catalog for how to deploy LLMs from our model catalogue in a few clicks.

Tryout Self-hosted Model

Follow these steps once you have your model deployed:

Save costs on Self-hosted Model

GPUs are costly, and running GPU-powered deployments can quickly drive up expenses. TrueFoundry offers built-in solutions to help reduce deployment costs.

You can choose from two cost-saving options:

- Auto-shutdown based on inactivity: Set up automatic shutdown of deployment pods if no new requests are received within a specified time frame.

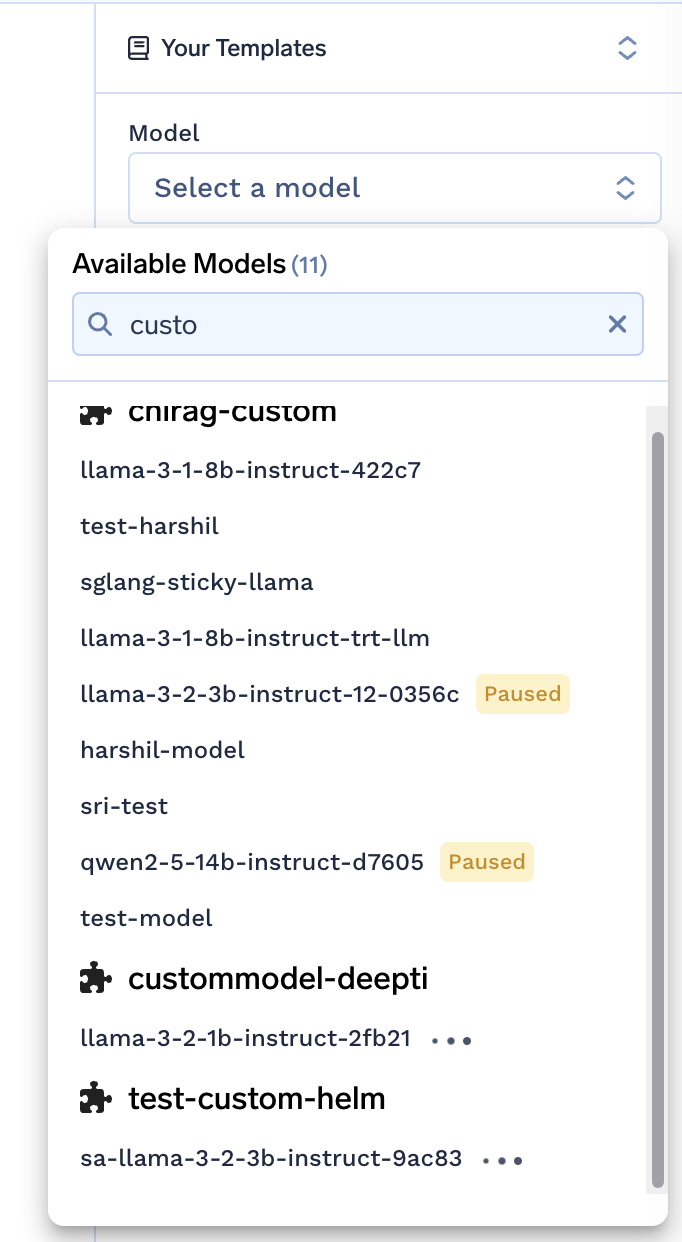

- Manual pause/resume: You can manually pause deployments that you anticipate won't be used for a certain period.

In both scenarios, the model status will be displayed as "paused" on the Gateway, as shown below:

Updated 21 days ago