Metadata

Metadata lets you add extra context to your AI requests by tagging them with relevant business context. This offers several key benefits:

- Monitor usage across various users, teams, environments, or even features.

- Configure rate limiting, fallback, and load balancing based on metadata.

- Filter logs to identify specific types of requests.

- Analyze usage patterns to gain insights into AI interactions.

- Conduct audits for compliance and security purposes.

Here is a sample metadata object:

```jsonc
// Example metadata
{
  "tfy_log_request": "true", // Whether to add a log/trace for this request or not
  "environment": "staging",  // The environment: dev, staging, or prod
  "feature": "countdown-bot" // Which feature initiated the request
}
```

Log metadata

To log metadata, send it with each request. How you do this depends on the client you're using.

Using the OpenAI SDK or LangChain SDK

If you're using the OpenAI SDK or LangChain SDK, pass the `X-TFY-METADATA` header through the `extra_headers` parameter, as shown in the following example:

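```python
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    # ... other parameters ...
    extra_headers={
        # Metadata is sent as a JSON string in the X-TFY-METADATA header
        "X-TFY-METADATA": '{"tfy_log_request":"true"}',
    },
)
```

Using REST API, Stream API Client, or cURL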

If you're using a REST API client, Stream API client, or cURL, include the X-TFY-METADATA header in your request:

```python
import requests

# URL is the gateway endpoint you send requests to (not shown here)
try:
    response = requests.post(
        URL,
        headers={
            # ... other headers ...
            "X-TFY-METADATA": '{"tfy_log_request":"true"}',
        },
        json={
            "messages": [
                {"role": "system", "content": "You are an AI bot."},
                {"role": "user", "content": "Enter your prompt here"},
            ],
            "model": "test-ai-inference/llama-new",
            "temperature": 0.7,
            "max_tokens": 256,
            # ... other parameters ...
        },
    )
    response.raise_for_status()
    print(response.json())
except requests.RequestException as e:
    print(f"Request failed: {e}")
```

You can include multiple metadata keys with each request. Note that all values must be strings with a maximum length of 128 characters.
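Rather than hand-writing the JSON string, you can build the header from a dictionary and check the string-only and 128-character rules before sending. The following is a minimal sketch, not part of any TrueFoundry SDK: `build_metadata_header` and `MAX_VALUE_LENGTH` are hypothetical names, and the checks only mirror the rules stated above.

```python
import json

# Assumed from the rule above: values must be strings of at most 128 characters
MAX_VALUE_LENGTH = 128


def build_metadata_header(metadata: dict) -> dict:
    """Validate metadata and serialize it into an X-TFY-METADATA header.

    Hypothetical helper: raises ValueError if a value is not a string
    or is longer than MAX_VALUE_LENGTH characters.
    """
    for key, value in metadata.items():
        if not isinstance(value, str):
            raise ValueError(f"metadata value for {key!r} must be a string")
        if len(value) > MAX_VALUE_LENGTH:
            raise ValueError(
                f"metadata value for {key!r} exceeds {MAX_VALUE_LENGTH} characters"
            )
    # Compact separators give the same form as the hand-written header strings
    return {"X-TFY-METADATA": json.dumps(metadata, separators=(",", ":"))}


headers = build_metadata_header(
    {"tfy_log_request": "true", "environment": "staging", "feature": "countdown-bot"}
)
print(headers["X-TFY-METADATA"])
# {"tfy_log_request":"true","environment":"staging","feature":"countdown-bot"}
```

The returned dictionary can be passed as `extra_headers` or merged into the `headers` of a `requests.post` call.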

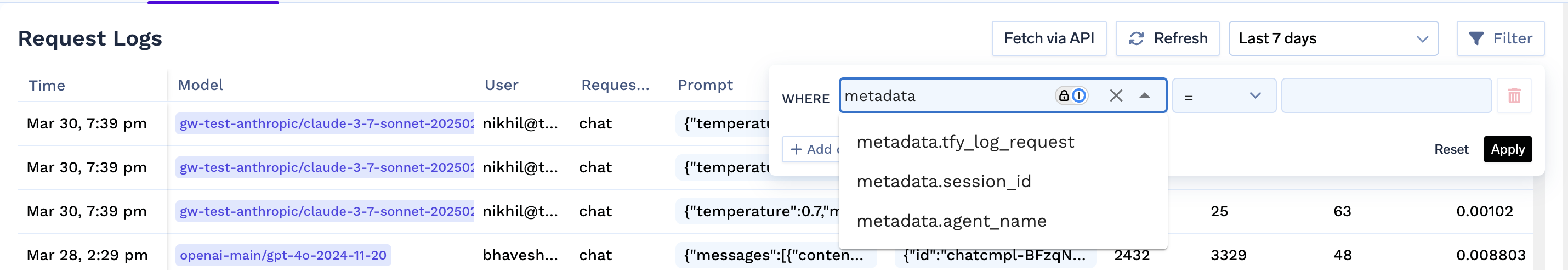

Filter logs and metrics

You can filter logs and metrics using metadata. Simply navigate to LLM Gateway, go to the Logs/Metrics tab, click on Filter, and apply the desired filters, as demonstrated in the screenshot below:

Rate limit

You can also rate limit requests based on metadata. For example, to limit all requests to the `gpt4` model from the `openai-main` account to 1000 requests per day when the environment is `dev`:

```yaml
name: ratelimiting-config
type: gateway-rate-limiting-config
# The rules are evaluated in order, and all matching rules are considered.
# If any one of them causes a rate limit, the corresponding ID will be returned.
rules:
  - id: "openai-gpt4-dev-env"
    when:
      models: ["openai-main/gpt4"]
      metadata:
        env: dev
    limit_to: 1000
    unit: requests_per_day
```

Learn more about rate limits here.
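To make the rule semantics concrete, here is a small in-memory Python sketch of how a metadata-matched `requests_per_day` rule could be evaluated. It is illustrative only, not the gateway's implementation: `DailyRateLimiter`, `rule_matches`, and the dictionary form of the rule are hypothetical. It assumes a rule matches when the model is listed and every metadata key/value pair in `when` appears in the request's metadata, and that an allowed request counts against every matching rule.

```python
from collections import defaultdict
from datetime import date

# Python mirror of the YAML rule above (hypothetical in-memory representation)
RULES = [
    {
        "id": "openai-gpt4-dev-env",
        "when": {"models": ["openai-main/gpt4"], "metadata": {"env": "dev"}},
        "limit_to": 1000,
        "unit": "requests_per_day",
    },
]


def rule_matches(rule: dict, model: str, metadata: dict) -> bool:
    """A rule matches if the model is listed and every metadata pair is present."""
    when = rule["when"]
    if "models" in when and model not in when["models"]:
        return False
    return all(metadata.get(k) == v for k, v in when.get("metadata", {}).items())


class DailyRateLimiter:
    """Illustrative limiter for requests_per_day rules; not the gateway's code."""

    def __init__(self, rules: list):
        self.rules = rules
        self.counts = defaultdict(int)  # (rule id, day) -> requests counted so far

    def check(self, model: str, metadata: dict, today: date):
        """Return the id of the rule that blocks this request, or None if allowed."""
        # Rules are evaluated in order and every matching rule is considered
        matching = [r for r in self.rules if rule_matches(r, model, metadata)]
        for rule in matching:
            if self.counts[(rule["id"], today)] >= rule["limit_to"]:
                return rule["id"]
        # Allowed: count the request against every matching rule
        for rule in matching:
            self.counts[(rule["id"], today)] += 1
        return None


limiter = DailyRateLimiter(RULES)
day = date(2024, 1, 1)
results = [limiter.check("openai-main/gpt4", {"env": "dev"}, day) for _ in range(1001)]
print(results[999], results[1000])  # None openai-gpt4-dev-env
```

In this sketch, requests with other metadata (for example `env: prod`) or for other models never match the rule, so they are not counted against its limit.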

Load balance

You can also load balance requests based on metadata. For example, to split traffic for the `bedrock/llama3` model for `customer1`, sending 60% to `azure/bedrock-llama3` and 40% to `aws/bedrock-llama3`:

```yaml
name: loadbalancing-config
type: gateway-load-balancing-config
rules:
  - id: "llama-bedrock-customer1"
    when:
      models: ["bedrock/llama3"]
      metadata:
        customer-id: customer1
    load_balance_targets:
      - target: "azure/bedrock-llama3"
        weight: 60
      - target: "aws/bedrock-llama3"
        weight: 40
```

Learn more about load balancing here.
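A 60/40 split like this amounts to weighted random selection. The Python sketch below shows one common way to do that, using cumulative weights over integer weights. It illustrates the general technique only and is not a description of how the gateway picks targets; `pick_target`, `choose_target`, and the dictionary form of the targets are hypothetical.

```python
import random
from collections import Counter

# Python mirror of the load_balance_targets above (hypothetical representation)
TARGETS = [
    {"target": "azure/bedrock-llama3", "weight": 60},
    {"target": "aws/bedrock-llama3", "weight": 40},
]


def pick_target(targets: list, r: int) -> str:
    """Map r in [0, total weight) to a target using cumulative weights."""
    cumulative = 0
    for t in targets:
        cumulative += t["weight"]
        if r < cumulative:
            return t["target"]
    raise ValueError("r must be smaller than the total weight")


def choose_target(targets: list, rng: random.Random) -> str:
    """Pick a target at random, proportionally to its weight."""
    total = sum(t["weight"] for t in targets)
    # randrange(total) returns an integer in [0, total)
    return pick_target(targets, rng.randrange(total))


rng = random.Random(42)  # seeded so the simulation is repeatable
counts = Counter(choose_target(TARGETS, rng) for _ in range(10_000))
print(counts)  # roughly a 60/40 split between the two targets
```

Keeping `pick_target` deterministic (it takes `r` as an argument) makes the weight boundaries easy to check independently of the random draw.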

Fallback

You can also configure fallbacks based on metadata. For example, to fall back to the Azure and AWS `llama3` models when a `customer1` request to `bedrock/llama3` fails with a 500 or 429 status code:

```yaml
name: model-fallback-config
type: gateway-fallback-config
rules:
  - id: "llama-bedrock-customer1-fallback"
    when:
      models: ["bedrock/llama3"]
      metadata:
        customer-id: customer1
      response_status_codes: [500, 429]
    fallback_models: ["azure/llama3", "aws/llama3"]
```

Learn more about fallback here.
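The Python sketch below illustrates the fallback flow described above. It assumes that fallback models are tried in the listed order and that the first response whose status code is not in `response_status_codes` is returned; `call_with_fallback`, the `send` callback, and the dictionary form of the rule are hypothetical stand-ins, not gateway APIs.

```python
from typing import Callable, Tuple

# Python mirror of the fallback rule above (hypothetical representation)
FALLBACK_RULE = {
    "id": "llama-bedrock-customer1-fallback",
    "when": {
        "models": ["bedrock/llama3"],
        "metadata": {"customer-id": "customer1"},
        "response_status_codes": [500, 429],
    },
    "fallback_models": ["azure/llama3", "aws/llama3"],
}


def call_with_fallback(
    model: str,
    metadata: dict,
    send: Callable[[str], int],
    rule: dict = FALLBACK_RULE,
) -> Tuple[str, int]:
    """Return (model that served the request, status code).

    `send` is a stand-in for a real model call: it takes a model name
    and returns an HTTP status code.
    """
    when = rule["when"]
    status = send(model)
    applies = model in when["models"] and all(
        metadata.get(k) == v for k, v in when["metadata"].items()
    )
    if not applies or status not in when["response_status_codes"]:
        return model, status
    # Try fallback models in order until one does not fail with a listed code
    used = model
    for fallback in rule["fallback_models"]:
        used, status = fallback, send(fallback)
        if status not in when["response_status_codes"]:
            break
    return used, status


fake_status = {"bedrock/llama3": 429, "azure/llama3": 500, "aws/llama3": 200}
print(call_with_fallback("bedrock/llama3", {"customer-id": "customer1"}, fake_status.get))
# ('aws/llama3', 200)
```

In this sketch, a status code that is not listed (for example 503) is returned as-is without trying any fallback, and requests without `customer-id: customer1` are never rerouted.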
