Tracing in LangGraph

Tracing helps you understand what happens under the hood when an agent run is invoked. With TrueFoundry’s tracing functionality, you can see the execution path, the tool calls made, the context used, and the latency of each agent run.

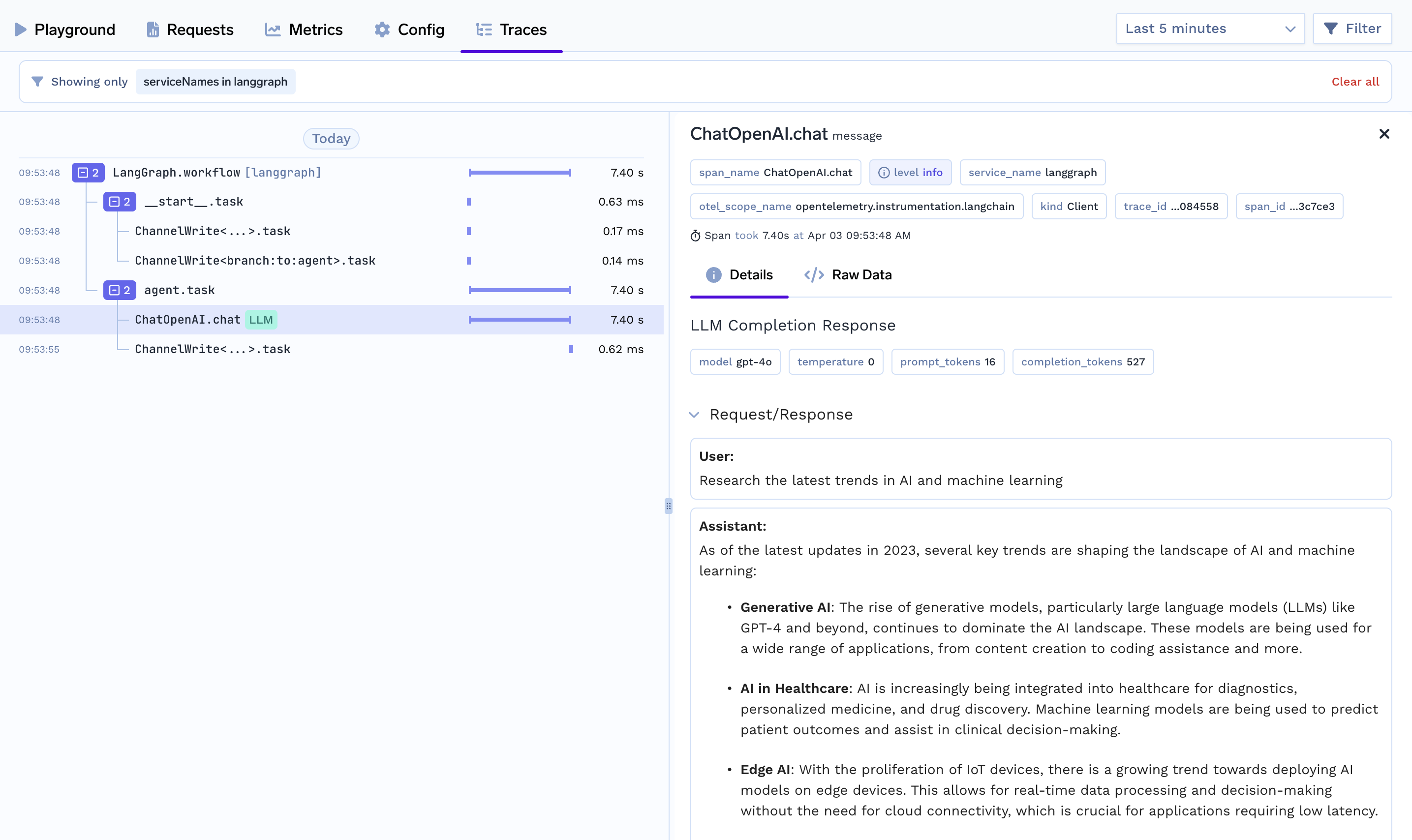

With TrueFoundry, you can monitor LangGraph by capturing traces. Instrumenting your LangGraph workflows emits spans and traces, which TrueFoundry visualizes to give you a clear view of execution flows. This real-time view of agent interactions, execution paths, resource usage, and performance trends helps you optimize workflows and improve the reliability and efficiency of LangGraph-based applications.
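To make the idea of spans concrete, here is a minimal, illustrative sketch of what a span records: a name, a parent, and a duration. It is not how TrueFoundry, Traceloop, or OpenTelemetry implement tracing; the `span` helper, the `SPANS` list, and the step names are hypothetical and exist only for this example.

```python
import time
from contextlib import contextmanager

# Hypothetical in-memory store; a real SDK exports spans to a backend instead.
SPANS = []
_stack = []  # names of the spans that are currently open


@contextmanager
def span(name):
    """Record the name, parent, and duration of the wrapped step."""
    parent = _stack[-1] if _stack else None
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield
    finally:
        duration_ms = (time.perf_counter() - start) * 1000
        _stack.pop()
        SPANS.append({"name": name, "parent": parent, "duration_ms": duration_ms})


def run_agent():
    with span("agent_run"):
        with span("llm_call"):
            time.sleep(0.01)  # stand-in for a model request
        with span("tool_call"):
            time.sleep(0.005)  # stand-in for a tool invocation


run_agent()
for s in SPANS:
    print(f"{s['name']:<10} parent={s['parent']} {s['duration_ms']:.1f} ms")
```

Each child span points at its parent, which is how a trace viewer can reconstruct the execution path and attribute latency to individual steps.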

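Install dependencies:

First, install the following packages:

    pip install langgraph==0.3.22 traceloop-sdk==0.38.12

The demo below also imports `langchain_openai` and `dotenv`; if they are not already in your environment, install `langchain-openai` and `python-dotenv` as well.

Set up environment variables: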

Add the following environment variables to enable tracing, for example in a .env file that the demo loads with load_dotenv():

    OPENAI_API_KEY=sk-proj-*
    TRACELOOP_BASE_URL=<<control-plane-url>>/api/otel
    TRACELOOP_HEADERS="Authorization=Bearer <<api-key>>"

Generate API key from here
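A missing variable is an easy mistake to make before the first run. The following sketch checks that the three variables listed above are set; the `missing_vars` helper and the example values are hypothetical and are not part of the Traceloop SDK or TrueFoundry.

```python
import os

# The three variables named in this guide.
REQUIRED_VARS = ("OPENAI_API_KEY", "TRACELOOP_BASE_URL", "TRACELOOP_HEADERS")


def missing_vars(env=os.environ):
    """Return the required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with a made-up environment: one variable is empty, one is absent.
example_env = {"OPENAI_API_KEY": "sk-proj-example", "TRACELOOP_BASE_URL": ""}
print(missing_vars(example_env))  # ['TRACELOOP_BASE_URL', 'TRACELOOP_HEADERS']
```

Calling `missing_vars()` with no arguments checks the real process environment, so it could run right after `load_dotenv()` and before the SDK is initialized.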

Demo LangGraph Agent

The following demo is a simple research agent: a single-node LangGraph workflow that sends a research prompt to the model and prints the response. For example, it can generate "A comprehensive report on AI and machine learning".

    from dotenv import load_dotenv
    from langchain_core.messages import HumanMessage
    from langchain_openai import ChatOpenAI
    from langgraph.graph import END, StateGraph, MessagesState
    from traceloop.sdk import Traceloop

    # Load environment variables from a .env file
    load_dotenv()

    # Initialize the Traceloop SDK
    Traceloop.init(app_name="langgraph")

    model = ChatOpenAI(model="gpt-4o", temperature=0)


    # Define the function that calls the model
    def call_model(state: MessagesState):
        messages = state["messages"]
        response = model.invoke(messages)
        # We return a list, because this will get added to the existing list
        return {"messages": [response]}


    # Define a new graph
    workflow = StateGraph(MessagesState)

    # Add the single node that calls the model
    workflow.add_node("agent", call_model)

    # Set the entrypoint as `agent`
    # This means that this node is the first one called
    workflow.set_entry_point("agent")

    # End the run once the agent node has responded
    workflow.add_edge("agent", END)

    app = workflow.compile()

    # Invoke the compiled graph
    final_state = app.invoke(
        {"messages": [HumanMessage(content="Research the latest trends in AI and machine learning")]},
    )

    print(final_state["messages"][-1].content)

View Logged Trace on UI
