AWS Bedrock

TrueFoundry offers a secure and efficient gateway to seamlessly integrate various Large Language Models (LLMs) into your applications, including models hosted on AWS Bedrock.

Adding Models

This section explains the steps to add AWS Bedrock models and configure the required access controls.

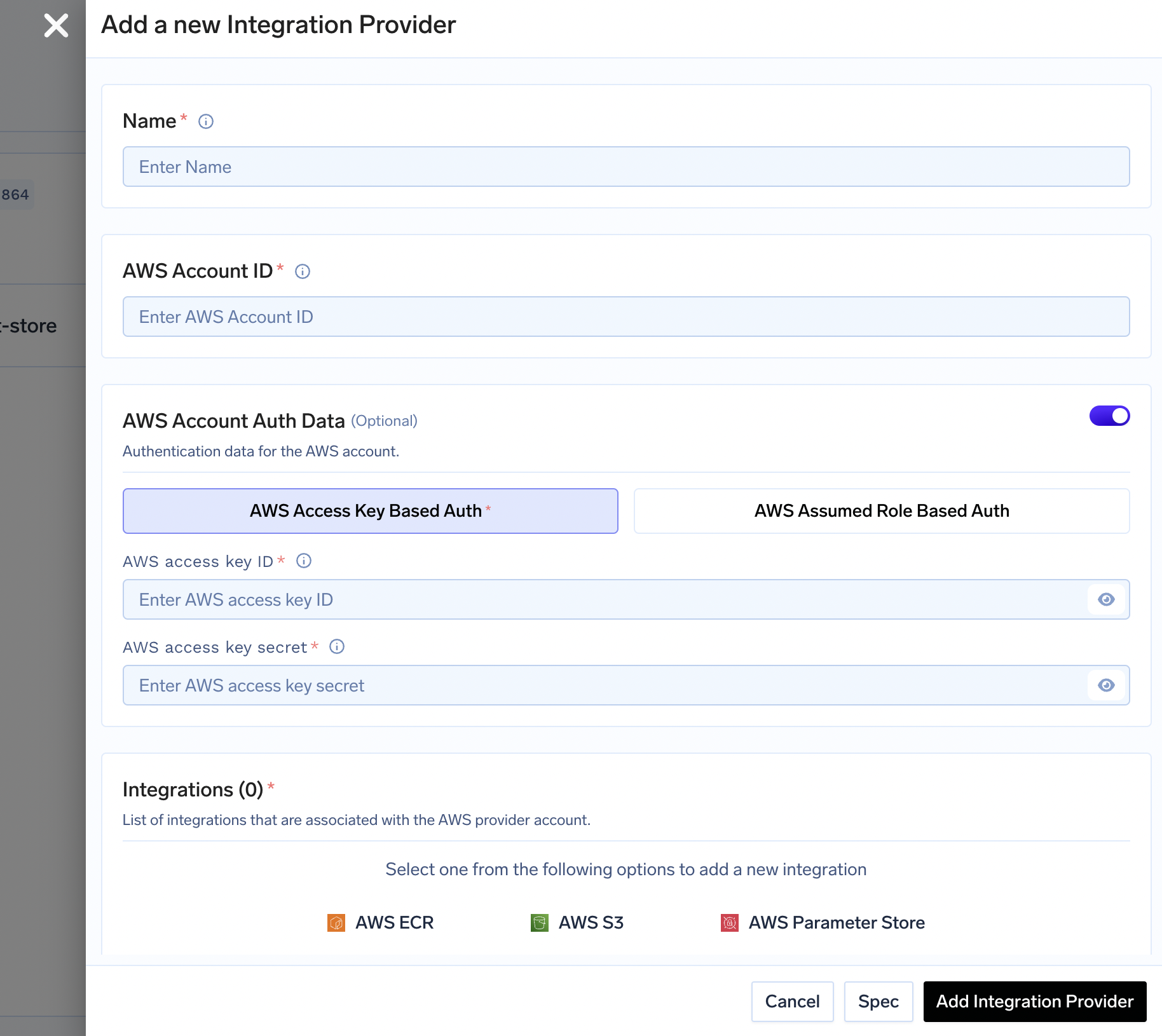

- From the TrueFoundry dashboard, navigate to Integrations > Add Provider Integration and select AWS.

- Complete the form with your AWS account details, including the authentication information (Access Key + Secret) or Assume Role ID.

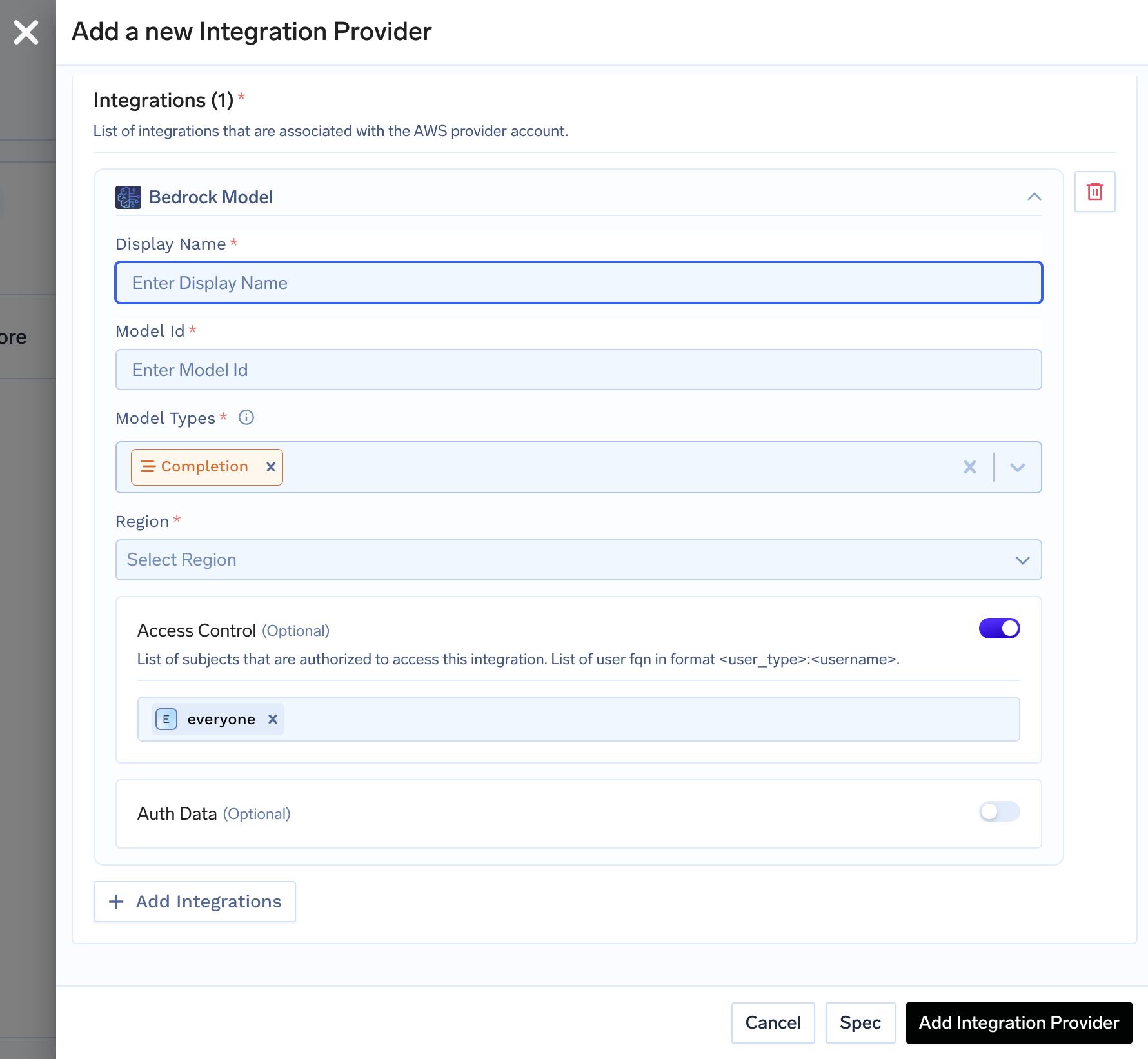

- Select the Bedrock Model option and provide the model ID and other required details to add one or more model integrations.

Inference

After adding the models, you can run inference through the TrueFoundry LLM Gateway using an OpenAI-compatible API. For instance, you can use the OpenAI Python library directly:

    from openai import OpenAI

    # Point the OpenAI client at the TrueFoundry LLM Gateway
    client = OpenAI(
        api_key="Enter your API Key here",
        base_url="https://llm-gateway.truefoundry.com/api/inference/openai",
    )

    # Send a chat completion request to the Bedrock model added above
    response = client.chat.completions.create(
        messages=[
            {"role": "system", "content": "You are an AI bot."},
            {"role": "user", "content": "Enter your prompt here"},
        ],
        model="bedrock-provider/llama-70b",
    )

    print(response.choices[0].message.content)
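If you prefer to receive the answer incrementally, the OpenAI-compatible API can also be called with `stream=True`. The following is a minimal sketch, not the gateway's reference client: the helper names `stream_text` and `make_gateway_client` are hypothetical, and it assumes the model you added supports streamed responses through the gateway.

```python
from typing import Any, Iterator


def stream_text(client: Any, model: str, prompt: str) -> Iterator[str]:
    """Yield text fragments from a streamed chat completion.

    `client` is expected to behave like an `openai.OpenAI` instance.
    """
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    for chunk in stream:
        # Some chunks carry no choices or no text (for example, the final one).
        if not chunk.choices:
            continue
        text = chunk.choices[0].delta.content
        if text:
            yield text


def make_gateway_client(api_key: str) -> Any:
    """Hypothetical helper: build an OpenAI client pointed at the gateway."""
    from openai import OpenAI  # imported lazily; requires the `openai` package

    return OpenAI(
        api_key=api_key,
        base_url="https://llm-gateway.truefoundry.com/api/inference/openai",
    )
```

To use it, iterate over `stream_text(make_gateway_client("your-api-key"), "bedrock-provider/llama-70b", "Enter your prompt here")` and print each fragment as it arrives.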

Supported Models

A list of models supported by AWS Bedrock, along with their corresponding model IDs, can be found here: View Full List

The TrueFoundry LLM Gateway supports all text and image models in Bedrock. We are also working on adding support for additional modalities, including speech, in the near future.

Extra Parameters

Internally, the TrueFoundry LLM Gateway utilizes the Bedrock Converse API for chat completion.

To pass additional model-specific input fields or parameters, such as top_k or frequency_penalty, include them under this key:

    "additionalModelRequestFields": {
        "frequency_penalty": 0.5
    }

Cross-Region Inference

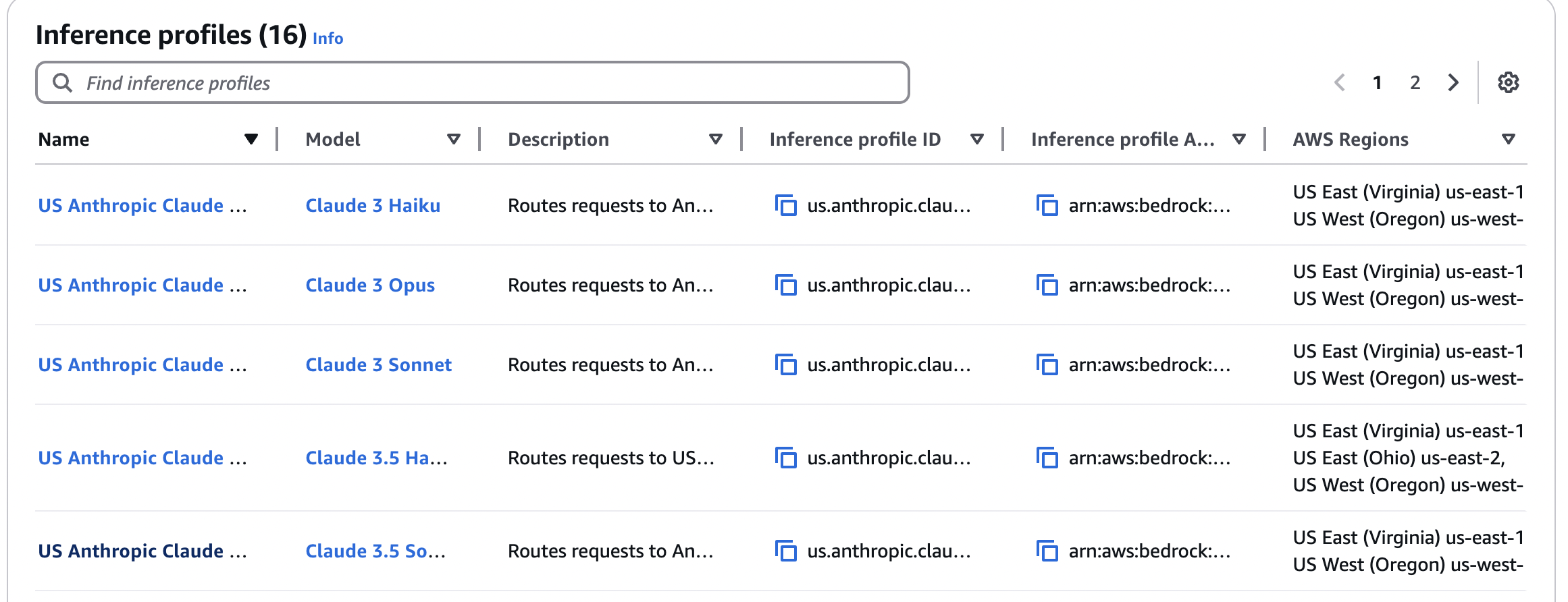

To manage traffic during on-demand inference by using compute across regions, you can use AWS Bedrock Cross-Region Inference. When setting the model ID in TrueFoundry, use the Inference Profile ID instead of the model ID.

You can find more information about cross-region inference here.

Use the Inference Profile ID as the model ID when adding the model to the TFY LLM Gateway.
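As an illustration of what an Inference Profile ID looks like, AWS's system-defined cross-region profiles are typically named by prefixing the model ID with a geography code such as `us` or `eu`. The sketch below assumes that naming pattern; the helper `to_inference_profile_id` is hypothetical, and the exact profile IDs available to your account should be taken from the AWS console or documentation rather than derived.

```python
def to_inference_profile_id(model_id: str, geo: str = "us") -> str:
    """Prefix a Bedrock model ID with a geography code (assumed pattern)."""
    if not model_id or not geo:
        raise ValueError("model_id and geo must be non-empty")
    return f"{geo}.{model_id}"


# Example model ID; substitute the one you actually use.
print(to_inference_profile_id("anthropic.claude-3-5-sonnet-20240620-v1:0"))
```

The resulting string is what you would enter in the model ID field, provided AWS lists a matching profile for that model and geography.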

Authentication Methods

Using AWS Access Key and Secret

- Create an IAM user (or choose an existing IAM user) following these steps.

- Add the required permissions for this user. The following policy grants permission to invoke all foundation models in us-east-1:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Sid": "InvokeAllModels",
            "Action": [
              "bedrock:InvokeModel",
              "bedrock:InvokeModelWithResponseStream"
            ],
            "Resource": [
              "arn:aws:bedrock:us-east-1::foundation-model/*"
            ]
          }
        ]
      }

- Create an access key for this user as per this doc.

- Use this access key and secret while adding the provider account to authenticate requests to the Bedrock model.
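The IAM policy in the steps above uses a wildcard resource. If you would rather grant access to specific models only, the same policy can be generated with one ARN per model. This is a sketch under that assumption; the function name `bedrock_invoke_policy` and the `InvokeSelectedModels` statement ID are illustrative, and the example model ID should be replaced with the ones you use.

```python
import json
from typing import Iterable


def bedrock_invoke_policy(region: str, model_ids: Iterable[str]) -> dict:
    """Build an IAM policy that allows invoking only the listed models."""
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSelectedModels",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


# Example model ID; substitute the IDs you actually use.
policy = bedrock_invoke_policy(
    "us-east-1", ["anthropic.claude-3-sonnet-20240229-v1:0"]
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM policy editor in place of the wildcard version.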

Using Assumed Role

- You can also directly specify a role that can be assumed by the service account attached to the pods running LLM Gateway.

- Read more about how assumed roles work here.

Using Bedrock Guardrails

- Create a guardrail in AWS. More information is available at https://aws.amazon.com/bedrock/guardrails

- Copy the guardrail ID and the version number.

- When calling an AWS Bedrock model through the TFY LLM Gateway, pass the following object along with the request:

      "guardrailConfig": {
        "guardrailIdentifier": "your-guardrail-id",
        "guardrailVersion": "1"
      }

- This should ensure that guardrails are enforced on the response. Consider this input, where the guardrail is configured to censor PII such as names and email addresses:

      {
        "model": "internal-bedrock/claude-3",
        "messages": [
          {
            "role": "user",
            "content": "What are some ideas for email for Elon Musk?"
          }
        ],
        "guardrailConfig": {
          "guardrailIdentifier": "xyz-123-768",
          "guardrailVersion": "1"
        }
      }

- Sample output:

      {
        "id": "1741339101780",
        "object": "chat.completion",
        "created": 1741339101,
        "model": "",
        "provider": "aws",
        "choices": [
          {
            "index": 0,
            "message": {
              "role": "assistant",
              "content": "Here are some ideas for email addresses for {NAME}:\n\n1. {EMAIL}\n2. {EMAIL}\n3. {EMAIL}\n4. {EMAIL}\n5. {EMAIL}\n6. {EMAIL} (or any relevant year)\n7. {EMAIL}\n8. {EMAIL}\n9. {EMAIL}\n10. {EMAIL}\n11. {EMAIL}\n12. {EMAIL}\n13. {EMAIL}\n14. {EMAIL}\n15. {EMAIL}\n\nWhen creating an email address, consider the following tips:\n\n1. Keep it professional if it's for work purposes.\n2. Make it easy to spell and remember.\n3. Avoid using numbers or special characters unless necessary.\n4. Consider using a combination of first name, last name, or initials.\n5. You can use different email addresses for personal and professional purposes.\n\nRemember to replace \"example.com\" with the actual domain you'll be using for your email address."
            },
            "finish_reason": "guardrail_intervened"
          }
        ],
        "usage": {
          "prompt_tokens": 25,
          "completion_tokens": 320,
          "total_tokens": 345
        }
      }

- If you're using a library like Langchain, you might have to pass the extra parameter through an argument such as extra_body, as required by the library. For an example, refer to this Langchain OpenAI class doc.
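With the OpenAI Python library, non-standard request fields such as `guardrailConfig` and `additionalModelRequestFields` can be sent through the `extra_body` argument of `chat.completions.create`, which merges them into the top level of the request body. The sketch below assumes the gateway reads both keys from the top level of the request, as in the sample input above; the helper names `build_extra_body`, `chat_with_extras`, and `guardrail_intervened` are hypothetical.

```python
from typing import Any, Optional


def build_extra_body(
    guardrail_id: Optional[str] = None,
    guardrail_version: Optional[str] = None,
    model_fields: Optional[dict] = None,
) -> dict:
    """Assemble the extra request fields described on this page."""
    if (guardrail_id is None) != (guardrail_version is None):
        raise ValueError("guardrail_id and guardrail_version go together")
    extra: dict = {}
    if guardrail_id is not None:
        extra["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        }
    if model_fields:
        extra["additionalModelRequestFields"] = dict(model_fields)
    return extra


def chat_with_extras(client: Any, model: str, prompt: str, extra_body: dict) -> Any:
    """Call the chat API; `client` should behave like `openai.OpenAI`."""
    return client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        extra_body=extra_body,
    )


def guardrail_intervened(response: Any) -> bool:
    """Check the finish reason shown in the sample output above."""
    return response.choices[0].finish_reason == "guardrail_intervened"


print(build_extra_body("your-guardrail-id", "1", {"frequency_penalty": 0.5}))
```

Checking `guardrail_intervened(response)` after each call lets the application distinguish a censored answer from a normal completion.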
