LLM Deployment And Finetuning FAQs

LLM Deployment

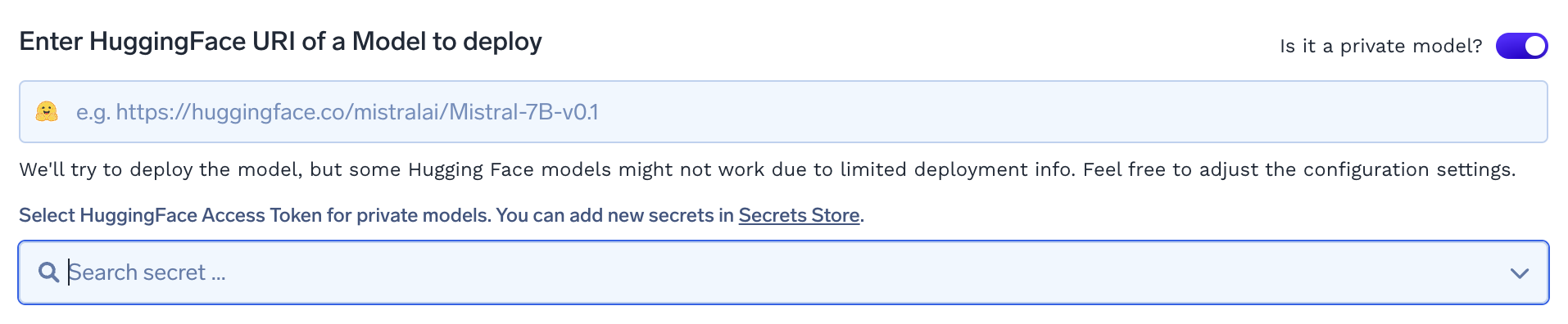

I have a private model on the Hugging Face Hub. How do I deploy it?

When entering your Hugging Face model URL, turn on the 'private model' toggle. The Hugging Face access token can then be fetched from the secret store. For setup steps, see the secret store documentation.

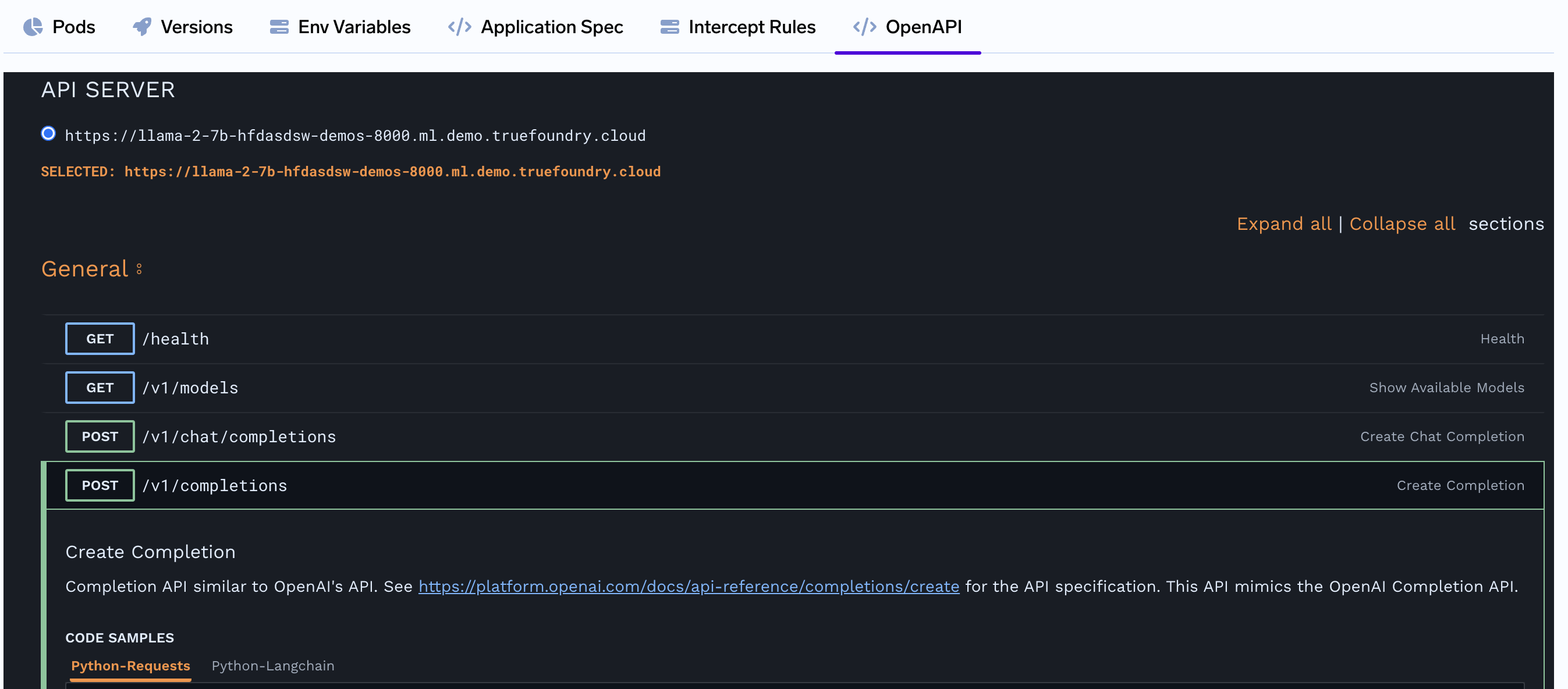

How do I access the endpoint of a successfully deployed LLM?

Navigate to the OpenAPI tab on the Service details page to view the API details of the LLM, including pre-filled requests and responses. You can send requests either through the UI or programmatically.
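As a rough sketch of the programmatic route, the snippet below builds a JSON POST request using only Python's standard library. The endpoint URL, path, and payload fields are hypothetical placeholders, not the actual API of your deployment; copy the real values from the pre-filled request shown in the OpenAPI tab.

```python
import json
import urllib.request

# Hypothetical values: replace with the URL and body shown in the OpenAPI tab.
ENDPOINT_URL = "https://your-llm-service.example.com/generate"
payload = {"prompt": "What is fine-tuning?", "max_tokens": 64}


def build_request(url: str, body: dict) -> urllib.request.Request:
    """Build a JSON POST request for the deployed service."""
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_request(ENDPOINT_URL, payload)
# result = send(request)  # uncomment once ENDPOINT_URL points at your service
```

If your service requires authentication, the request will also need the appropriate header; check the OpenAPI tab for what the endpoint expects.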

The OpenAPI tab throws an error even though the model was deployed successfully. Why?

The OpenAPI tab might have failed because DNS mapping is not configured for the tenant on TrueFoundry. Once DNS is configured, the OpenAPI tab should work correctly.

How can I deploy an LLM that I have logged in TrueFoundry's Model Registry?

Please refer to the detailed guide on deploying models from the Model Registry.

LLM finetuning

What are the prerequisites to run a fine-tuning workflow?

- Workspace with access to MLRepo

- Fine-tuning data stored in .jsonl format, either locally, in cloud storage, or as a TrueFoundry artifact.

- Cluster with GPU nodes
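For the data prerequisite, a .jsonl file holds one JSON object per line. The sketch below writes such a file and then validates it line by line. The `prompt` and `completion` field names are assumptions made for illustration; check the fine-tuning guide for the exact schema your workflow expects.

```python
import json
import os
import tempfile

# Assumed record schema for illustration; the required keys may differ.
records = [
    {"prompt": "Translate to French: Hello", "completion": "Bonjour"},
    {"prompt": "Translate to French: Thank you", "completion": "Merci"},
]


def write_jsonl(path, rows):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Parse every non-empty line; raise ValueError naming the bad line."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            if not line.strip():
                continue
            try:
                rows.append(json.loads(line))
            except json.JSONDecodeError as exc:
                raise ValueError(f"line {line_number} is not valid JSON") from exc
    return rows


path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
write_jsonl(path, records)
loaded = read_jsonl(path)
```

Running `read_jsonl` on a dataset before launching a run is a cheap way to catch a malformed line early rather than partway through a GPU job.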

I am having trouble opening .ipynb files in my notebook instance and get a file loading error. How do I resolve it?

As a first step, you should look at the logs for the corresponding notebook by going to {Truefoundry_access_URL}/deployments?tab=notebooks. Many issues arise from the notebook running out of storage, which will be evident from logs stating "No Space left in disk." To resolve this, edit the notebook and increase the "Home Directory Size" to a higher value. This issue often occurs when fine-tuning or accessing large models that have been downloaded multiple times in the notebook. A recommended practice is to delete older models that are not being used to save space and reduce costs. If you encounter an error that you cannot debug, please reach out to the TrueFoundry team for assistance.
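To see how much space is left and what is consuming it, a short script run from inside the notebook can help. This is a minimal sketch using only the Python standard library; the helper names are illustrative, and it assumes the home directory is the volume sized by "Home Directory Size".

```python
import os
import shutil


def free_space_gb(path="."):
    """Return free space on the filesystem containing `path`, in GB."""
    return shutil.disk_usage(path).free / 1e9


def dir_size_bytes(path):
    """Total size of regular files under `path`, skipping symlinks."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                try:
                    total += os.path.getsize(full)
                except OSError:
                    pass  # file vanished or is unreadable; ignore it
    return total


def largest_subdirs(path, top=5):
    """List immediate subdirectories of `path`, largest first."""
    sizes = [
        (entry.path, dir_size_bytes(entry.path))
        for entry in os.scandir(path)
        if entry.is_dir(follow_symlinks=False)
    ]
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top]


home = os.path.expanduser("~")
print(f"Free space: {free_space_gb(home):.1f} GB")
```

Calling `largest_subdirs(home)` then shows which directories to clean up. If models were downloaded through the Hugging Face libraries with default settings, repeated downloads typically accumulate under `~/.cache/huggingface`, so that directory is a reasonable place to look first.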

When should I launch fine-tuning as a notebook, and when as a job?

If you are running experiments involving fine-tuning and need to shuffle between models and datasets, it is generally recommended to use notebooks. However, for fine-tuning over larger datasets, it is advisable to use Jobs.

The default configuration selects Spot instances. Is it okay and advisable to run fine-tuning on Spot machines?

The major drawback of Spot machines is that the cloud provider can terminate them at any time, which interrupts your run. However, if your fine-tuning code saves checkpoints, Spot instances are a viable option, since they are 60-90% cheaper depending on the cloud provider. Our fine-tuning setup enables checkpoint saving by default, so even if a Spot instance is terminated, you can resume fine-tuning from the last saved checkpoint. If your task is not extremely time-sensitive, we recommend using Spot instances for fine-tuning to reduce costs. The TrueFoundry Platform documentation describes how to restart from a saved checkpoint.
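The checkpoint-and-resume idea can be illustrated with a small, self-contained simulation. This is a generic sketch, not TrueFoundry's actual checkpointing mechanism: the JSON checkpoint file, the function names, and the fake "training step" are all assumptions made for illustration.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write atomically so an interruption never leaves a half-written file."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if none exists."""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path, total_steps, save_every, interrupt_at=None):
    """Run from the last checkpoint; optionally simulate a Spot termination."""
    state = load_checkpoint(path)
    for step in range(state["step"] + 1, total_steps + 1):
        if interrupt_at is not None and step == interrupt_at:
            return state  # machine reclaimed before this step runs
        state = {"step": step, "loss": 1.0 / step}  # stand-in for a real step
        if step % save_every == 0:
            save_checkpoint(path, state)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
train(ckpt, total_steps=100, save_every=10, interrupt_at=37)
resumed_from = load_checkpoint(ckpt)["step"]  # 30: steps 31-36 are lost
final = train(ckpt, total_steps=100, save_every=10)
```

In this simulation the interruption at step 37 costs only the six steps since the last checkpoint. The checkpoint interval is the trade-off to tune: saving more often loses less work on termination but spends more time and storage on writes.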
