Caching Hugging Face models using volumes

Reduce startup times across pod restarts by caching models

To improve startup times and avoid model download delays when pods restart in services that use models from the Hugging Face Transformers library, follow these steps on TrueFoundry:

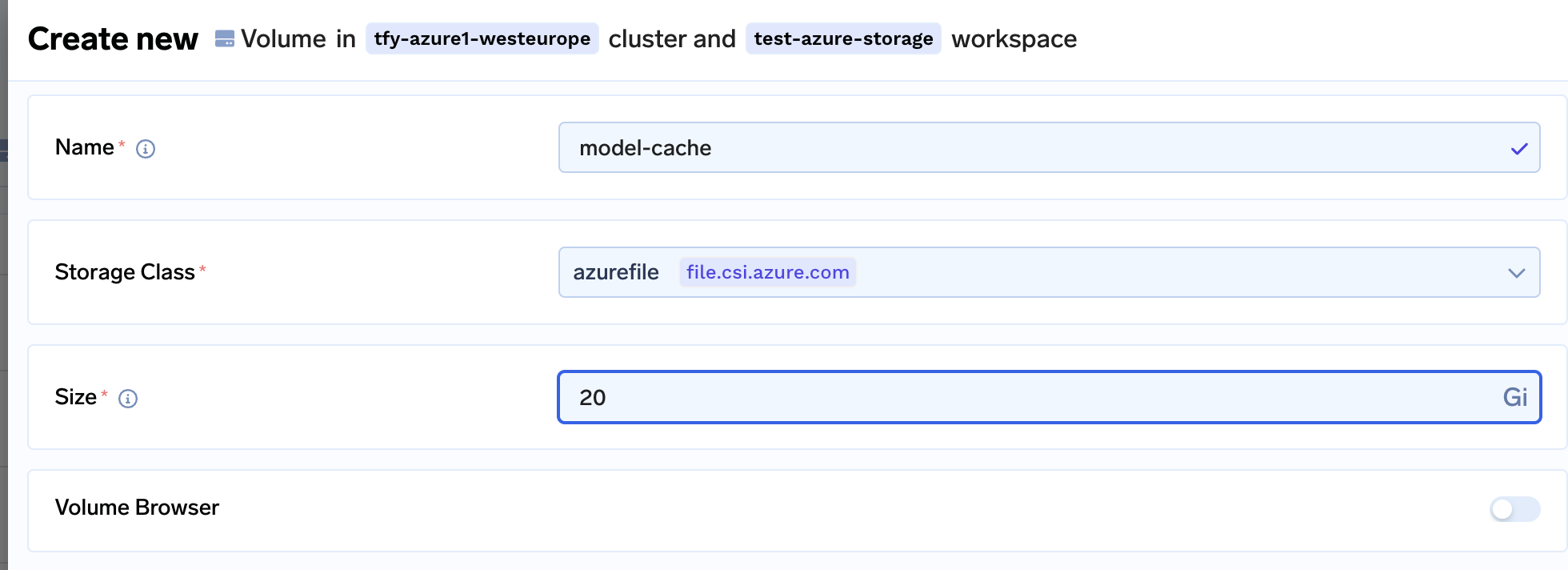

- Create a Persistent Volume: Set up a persistent volume large enough to hold the models and any other downloaded artifacts. To do this, go to New Deployments > Volume and enter the details. The recommended storage classes per cloud provider are:

  - AWS: `efs-sc`
  - Azure: `azurefile`
  - GCP: `standard-rwo` or `premium-rwo`

Creating a persistent volume on Azure cloud

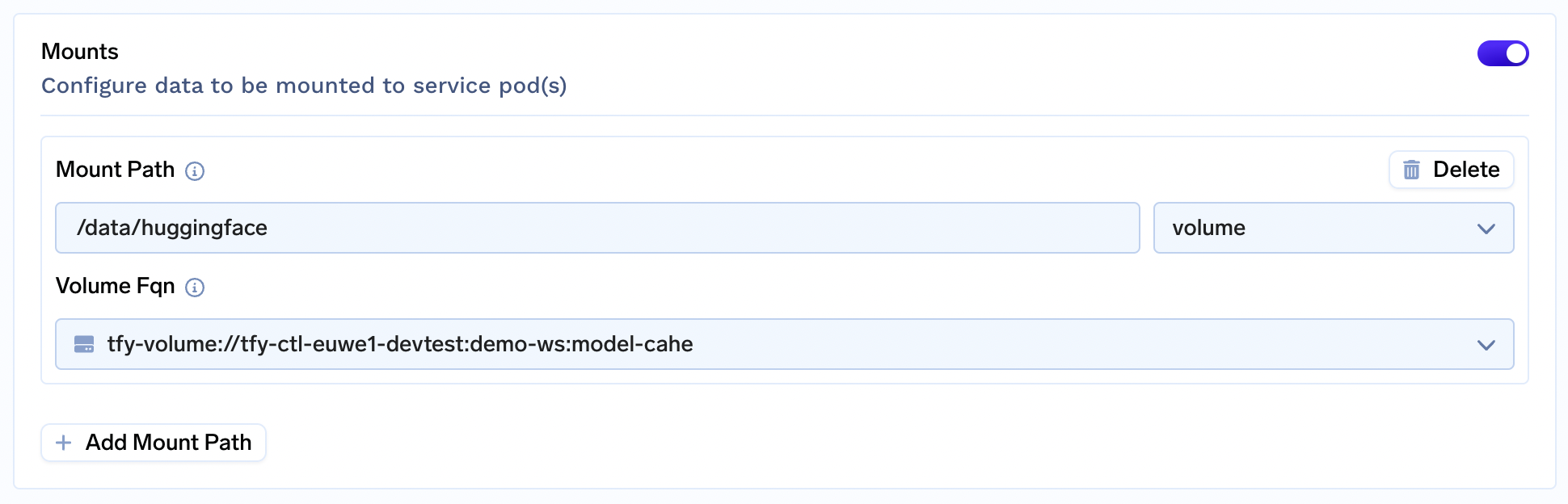
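Sizing the volume means knowing how much space the models take on disk. The sketch below assumes you can run Python somewhere a copy of the model cache already exists (for example, a local machine); it sums the regular files under a directory and rounds up to whole GiB. The helper names and the 1.5x headroom factor are illustrative assumptions, not TrueFoundry recommendations. Symlinks are skipped because, as far as we know, the Hugging Face hub cache stores each file once under `blobs/` and links to it from `snapshots/`.

```python
import math
import os


def directory_size_bytes(path: str) -> int:
    """Sum the sizes of regular files under `path`.

    Symlinked files are skipped so that files under `snapshots/`, which link
    into `blobs/` in the hub cache layout, are not counted twice. os.walk does
    not descend into symlinked directories by default either.
    """
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full_path = os.path.join(root, name)
            if os.path.islink(full_path):
                continue
            total += os.path.getsize(full_path)
    return total


def recommended_volume_gib(size_bytes: int, headroom: float = 1.5) -> int:
    """Scale the measured size by a (hypothetical) headroom factor and round up to whole GiB."""
    gib = size_bytes * headroom / (1024 ** 3)
    return max(1, math.ceil(gib))
```

For example, `recommended_volume_gib(directory_size_bytes(os.path.expanduser("~/.cache/huggingface")))` gives a starting point for the volume size if your local cache uses the default location.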

- Mount the Volume: While creating or editing your TrueFoundry service, mount the persistent volume you created at a path of your choice, such as `/data/huggingface`.

Attaching volume to service deployment

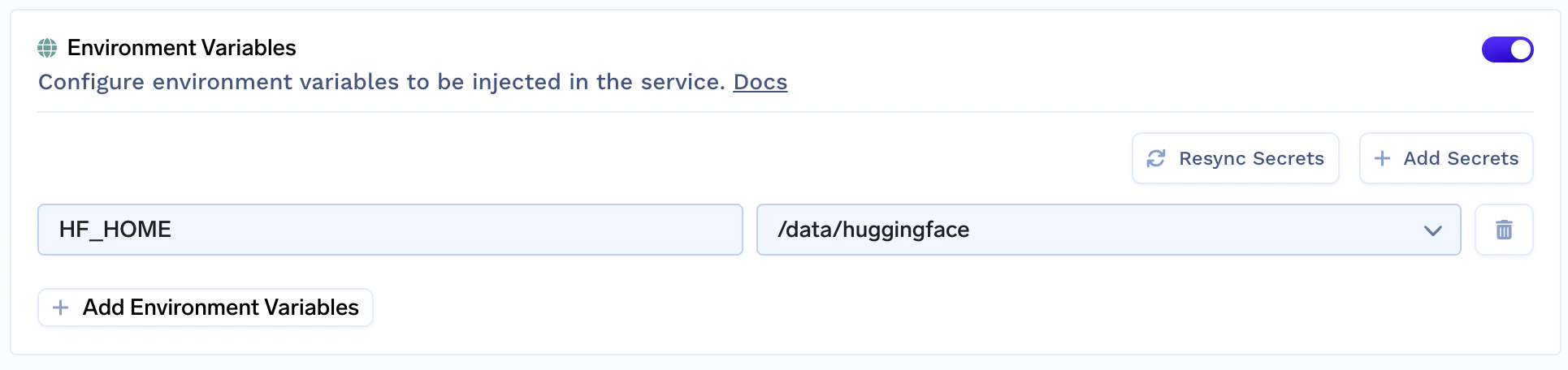
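A service can fail fast with a clear message if the volume is not mounted where it expects. This is a minimal sketch; `check_cache_dir` and `CacheDirError` are hypothetical names, and `/data/huggingface` is only the example path from this step.

```python
import os


class CacheDirError(RuntimeError):
    """Raised when the expected cache directory is missing or unusable."""


def check_cache_dir(path: str) -> str:
    """Fail fast if the mounted volume path is missing or not writable.

    Returns the absolute path so the caller can log where models will be cached.
    """
    if not os.path.isdir(path):
        raise CacheDirError(f"{path} does not exist; is the volume mounted?")
    if not os.access(path, os.W_OK):
        raise CacheDirError(f"{path} is not writable by this process")
    return os.path.abspath(path)
```

Calling `check_cache_dir(os.environ.get("HF_HOME", "/data/huggingface"))` at startup, before loading any model, surfaces a missing mount immediately rather than letting downloads silently land in the container's ephemeral filesystem. To also confirm that the path is a mount point and not an ordinary directory, `os.path.ismount` could be added to the check.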

- Set `HF_HOME`: When you create the service, add an environment variable named `HF_HOME` and set it to the mount path of the persistent volume (for example, `/data/huggingface`). This ensures that any artifact downloaded from Hugging Face is stored in this folder.

Setting the `HF_HOME` variable
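To illustrate how `HF_HOME` controls where downloads land: as we understand it, `huggingface_hub` (which Transformers uses for downloads) resolves its cache directory once, at import time, from a few environment variables. The function below is a simplified re-implementation of that lookup for illustration only, not the library's own code; it shows that with only `HF_HOME=/data/huggingface` set, model files end up under `/data/huggingface/hub`, inside the mounted volume.

```python
import os
from typing import Mapping


def resolve_hub_cache(env: Mapping[str, str]) -> str:
    """Return the directory where downloaded Hub files would be cached.

    Simplified lookup order: HF_HUB_CACHE, then the legacy
    HUGGINGFACE_HUB_CACHE, then $HF_HOME/hub. HF_HOME itself defaults to
    $XDG_CACHE_HOME/huggingface, or ~/.cache/huggingface.
    """
    xdg_cache = env.get("XDG_CACHE_HOME", os.path.join(os.path.expanduser("~"), ".cache"))
    hf_home = os.path.expanduser(env.get("HF_HOME", os.path.join(xdg_cache, "huggingface")))
    legacy_cache = env.get("HUGGINGFACE_HUB_CACHE", os.path.join(hf_home, "hub"))
    return env.get("HF_HUB_CACHE", legacy_cache)
```

Because the lookup happens at import, `HF_HOME` needs to be in the environment before the Python process imports `transformers` or `huggingface_hub`, which setting it on the service ensures. If `HF_HUB_CACHE` is also set, it takes precedence and should point inside the volume as well.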

- Deploy the Service: Proceed to deploy your service. The first start downloads the model into the persistent volume; because it stays cached there, subsequent pod restarts do not need to re-download it, which reduces startup time.
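To confirm that restarts actually reuse the cache, the service can log whether a model is already present before loading it. This sketch relies on the `models--<org>--<name>/snapshots/<revision>` cache layout that `huggingface_hub` uses, to our knowledge; that layout is an internal detail that may change, and the helper names are hypothetical. For a supported API that checks an individual file, see `huggingface_hub.try_to_load_from_cache`.

```python
import os


def repo_cache_folder(hub_cache: str, repo_id: str) -> str:
    """Path of a model repo inside the hub cache, e.g. models--org--name."""
    return os.path.join(hub_cache, "models--" + repo_id.replace("/", "--"))


def is_model_cached(hub_cache: str, repo_id: str) -> bool:
    """True if at least one snapshot of `repo_id` exists in the cache."""
    snapshots = os.path.join(repo_cache_folder(hub_cache, repo_id), "snapshots")
    return os.path.isdir(snapshots) and len(os.listdir(snapshots)) > 0
```

With `HF_HOME=/data/huggingface`, calling `is_model_cached("/data/huggingface/hub", "<your-model-id>")` should return `False` on the very first start and `True` after a pod restart.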
