Deploy Tensorflow Serving with gRPC

Tensorflow Serving gRPC Service

The complete example can be found at Tensorflow Serving gRPC - Truefoundry Examples.

We will deploy a pre-trained MobileNet V3 (small) image classification model from Tensorflow Hub.

Project structure

Our final project structure will look like this:

```
.
├── Dockerfile
├── client.py
├── german-shepherd.jpeg
├── deploy.py
└── models
    ├── imagenet_mobilenet_v3_small_075_224_classification_5
    │   └── 1
    │       ├── assets
    │       ├── saved_model.pb
    │       └── variables
    │           ├── variables.data-00000-of-00001
    │           └── variables.index
    └── models.config
```
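TF Serving treats each numbered subdirectory under a model's `base_path` as one model version and, with its default version policy, serves only the highest-numbered one. As a quick local sanity check before building the image, a minimal sketch like the following lists the versions that actually contain a `saved_model.pb`. The helper name `find_model_versions` is hypothetical and not part of any library.

```python
import re
from pathlib import Path


def find_model_versions(base_path):
    """Return the sorted integer versions under base_path that contain a saved_model.pb.

    Hypothetical helper: a version directory is any subdirectory named with ASCII digits
    only (e.g. "1") that holds a saved_model.pb file, as in the layout above.
    """
    versions = []
    for child in Path(base_path).iterdir():
        if (
            child.is_dir()
            and re.fullmatch(r"[0-9]+", child.name)
            and (child / "saved_model.pb").is_file()
        ):
            versions.append(int(child.name))
    return sorted(versions)


if __name__ == "__main__":
    base = Path("models/imagenet_mobilenet_v3_small_075_224_classification_5")
    if base.is_dir():
        versions = find_model_versions(base)
        print("versions found:", versions)
        if versions:
            # With TF Serving's default version policy, the highest version is served
            print("served by default:", max(versions))
    else:
        print(f"{base} does not exist yet; download the model first")
```

Directories such as `assets` or a version folder missing `saved_model.pb` are skipped, which is usually what you want to catch before a build.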

Step 1: Download the model

```shell
# TF Serving loads each model version from a numbered subdirectory (here: 1)
mkdir -p models/imagenet_mobilenet_v3_small_075_224_classification_5/1

# Quote the URL so the shell does not treat '?' as a glob character
wget -O mobilenet_v3_small_075_224.tar.gz "https://tfhub.dev/google/imagenet/mobilenet_v3_small_075_224/classification/5?tf-hub-format=compressed"

tar -C models/imagenet_mobilenet_v3_small_075_224_classification_5/1/ -zxvf mobilenet_v3_small_075_224.tar.gz

rm mobilenet_v3_small_075_224.tar.gz
```
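If `tar` is not available, the extraction part of this step can be sketched with the Python standard library. This is a minimal sketch, assuming the archive has already been downloaded; the helper name `extract_to_version_dir` is hypothetical. Like the `mkdir`/`tar -C` commands above, it unpacks the SavedModel into the numbered version directory TF Serving expects.

```python
import tarfile
from pathlib import Path


def extract_to_version_dir(archive_path, model_root, version=1):
    """Unpack a .tar.gz SavedModel archive into <model_root>/<version>/ and return that path.

    Hypothetical helper mirroring `mkdir -p <model_root>/1` followed by
    `tar -C <model_root>/1/ -zxvf <archive>`.
    """
    version_dir = Path(model_root) / str(version)
    version_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, mode="r:gz") as archive:
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons can reject unsafe members (absolute paths, "..", device files)
            archive.extractall(path=version_dir, filter="data")
        else:
            # Older Pythons have no extraction filters: only extract archives you trust
            archive.extractall(path=version_dir)
    return version_dir
```

For example, `extract_to_version_dir("mobilenet_v3_small_075_224.tar.gz", "models/imagenet_mobilenet_v3_small_075_224_classification_5")` would reproduce the `mkdir` and `tar` lines above.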

Create a `models.config` in `models/`:

models/models.config

```
model_config_list {
  config {
    name: 'mobilenet-v3-small'
    base_path: '/mnt/models/imagenet_mobilenet_v3_small_075_224_classification_5'
    model_platform: 'tensorflow'
  }
}
```
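`models.config` is a TF Serving `ModelServerConfig` in protobuf text format: a `model_config_list` holding one `config` entry per model, where `name` is what clients send as `model_spec.name` and `base_path` is the directory containing the numbered versions. When serving several models from one image, a small generator can keep this file consistent. A minimal sketch; the `render_models_config` helper is hypothetical and only emits the three fields used above.

```python
def render_models_config(models):
    """Render a TF Serving model config (protobuf text format) for (name, base_path) pairs.

    Hypothetical helper: it only writes the name, base_path and model_platform fields
    used in models/models.config and does not escape quotes inside values.
    """
    lines = ["model_config_list {"]
    for name, base_path in models:
        lines += [
            "  config {",
            f"    name: '{name}'",
            f"    base_path: '{base_path}'",
            "    model_platform: 'tensorflow'",
            "  }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


print(render_models_config([
    ("mobilenet-v3-small", "/mnt/models/imagenet_mobilenet_v3_small_075_224_classification_5"),
]), end="")
```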

Step 2: Dockerfile for Tensorflow serving

Create a `Dockerfile` with the following contents:

Dockerfile

```dockerfile
FROM tensorflow/serving:2.11.0

# Copy the model versions and models.config into the image
COPY models/ /mnt/models

ENTRYPOINT ["/usr/bin/tensorflow_model_server", "--model_config_file=/mnt/models/models.config", "--port=9000", "--grpc_max_threads=8", "--enable_batching=true", "--enable_model_warmup=true"]
```
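The `ENTRYPOINT` above uses Docker's exec form, a JSON array of strings, so the model server runs directly without a wrapping shell and receives signals itself. If you would rather generate the flag list (for example, to keep `--port` in sync with the port declared in `deploy.py`), a minimal sketch could look like this. `build_entrypoint` is a hypothetical helper, and the flag values are copied from the Dockerfile.

```python
import json

SERVER_BINARY = "/usr/bin/tensorflow_model_server"


def build_entrypoint(flags):
    """Return a Dockerfile ENTRYPOINT line in exec (JSON array) form.

    Hypothetical helper: `flags` maps flag names (without leading dashes) to values;
    booleans are rendered as lowercase true/false, as in the Dockerfile above.
    """
    args = [SERVER_BINARY]
    for name, value in flags.items():
        if isinstance(value, bool):
            value = str(value).lower()
        args.append(f"--{name}={value}")
    return "ENTRYPOINT " + json.dumps(args)


print(build_entrypoint({
    "model_config_file": "/mnt/models/models.config",
    "port": 9000,
    "grpc_max_threads": 8,
    "enable_batching": True,
    "enable_model_warmup": True,
}))
```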

Step 3: Deploy it using Truefoundry

We can now deploy our service using either the Python SDK or a YAML spec. Make sure you have `servicefoundry` installed and set up.

Via Python SDK

Create a `deploy.py`:

deploy.py

```python
import argparse
import logging

from servicefoundry import (
    AppProtocol,
    Build,
    DockerFileBuild,
    Port,
    Resources,
    Service,
)

logging.basicConfig(level=logging.INFO, format=logging.BASIC_FORMAT)

parser = argparse.ArgumentParser()
parser.add_argument(
    "--workspace_fqn",
    type=str,
    required=True,
    help="FQN of the workspace to deploy to",
)
args = parser.parse_args()

service = Service(
    name="mobilenet-v3-small-tf",
    # Build the image from the Dockerfile in the current directory
    image=Build(build_spec=DockerFileBuild()),
    resources=Resources(
        cpu_request=1,
        cpu_limit=1,
        memory_request=500,
        memory_limit=500,
    ),
    ports=[
        Port(
            port=9000,  # must match --port in the Dockerfile ENTRYPOINT
            app_protocol=AppProtocol.grpc,
            # Note: Your cluster should allow subdomain based routing (*.yoursite.com) for gRPC to work correctly via public internet.
            # A host matching the wildcard base domain for the cluster can be explicitly configured by passing in `host`
            host="<Provide a host value based on your configured domain>",
        ),
    ],
)
service.deploy(workspace_fqn=args.workspace_fqn)
```

We can now deploy by running this file with your Workspace FQN:

```shell
python deploy.py --workspace_fqn <YOUR WORKSPACE FQN HERE>
```

Via YAML Spec

Create a `deploy.yaml` with the following spec:

deploy.yaml

```yaml
name: mobilenet-v3-small-tf
type: service
image:
  type: build
  build_spec:
    type: dockerfile
    dockerfile_path: './Dockerfile'
    build_context_path: './'
  build_source:
    type: local
ports:
  - port: 9000
    expose: true
    protocol: TCP
    app_protocol: grpc
    host: <Provide a host value based on your configured domain>
replicas: 1
resources:
  cpu_limit: 0.5
  cpu_request: 0.2
  memory_limit: 500
  memory_request: 500
```
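The `resources` block sets requests (what the scheduler reserves for the container) and limits (the ceiling it may use). Assuming the platform maps these fields onto Kubernetes container requests and limits, a request larger than its limit is rejected. A minimal pre-flight check, written against a plain dict that mirrors the YAML above; the helper `check_resources` is hypothetical, and values are compared in whatever units the spec uses.

```python
def check_resources(resources):
    """Return a list of problems where a *_request exceeds its *_limit.

    Hypothetical helper for a dict shaped like the `resources` block of deploy.yaml.
    """
    problems = []
    for kind in ("cpu", "memory"):
        request = resources.get(f"{kind}_request")
        limit = resources.get(f"{kind}_limit")
        if request is not None and limit is not None and request > limit:
            problems.append(f"{kind}_request ({request}) exceeds {kind}_limit ({limit})")
    return problems


spec_resources = {"cpu_limit": 0.5, "cpu_request": 0.2, "memory_limit": 500, "memory_request": 500}
print(check_resources(spec_resources) or "resources OK")
```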

We can now deploy by calling `sfy deploy` with your Workspace FQN:

```shell
sfy deploy --file deploy.yaml --workspace-fqn <YOUR WORKSPACE FQN HERE>
```

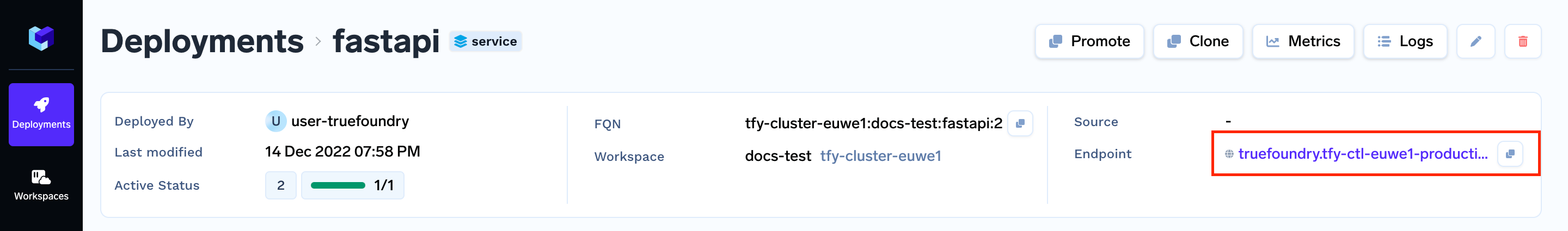

Step 4: Get the Endpoint URL

Once deployed, we can find the Service in the Deployments section of the platform and copy its Endpoint.

Step 5: Interacting with our Service

We can now interact with our service using the Endpoint from Step 4.

- Install the Tensorflow Serving client:

```shell
pip install numpy==1.22.4 tensorflow==2.11.0 tensorflow_serving_api==2.11.0
```

- Download this image and save it as `german-shepherd.jpeg`.

- Finally, create a `client.py` that will send this image for prediction:

client.py

```python
import argparse

import grpc
import numpy as np
import tensorflow as tf
from tensorflow_serving.apis import predict_pb2, prediction_service_pb2_grpc

parser = argparse.ArgumentParser()
parser.add_argument(
    "--host",
    type=str,
    required=True,
    help="Host of the deployed tf serving service",
)
args = parser.parse_args()

GRPC_MAX_RECEIVE_MESSAGE_LENGTH = 4096 * 4096 * 3  # Max message length (bytes) the client accepts

# TLS channel to the public endpoint, with the receive limit raised above gRPC's default
channel = grpc.secure_channel(
    args.host,
    credentials=grpc.ssl_channel_credentials(),
    options=[("grpc.max_receive_message_length", GRPC_MAX_RECEIVE_MESSAGE_LENGTH)],
)
stub = prediction_service_pb2_grpc.PredictionServiceStub(channel)

# Predict example
# The model expects a float32 batch of 224x224 RGB images with values in [0, 1]
image = tf.io.read_file("german-shepherd.jpeg")
image = tf.io.decode_jpeg(image, channels=3)
image = tf.image.convert_image_dtype(image, dtype=tf.float32)  # uint8 [0, 255] -> float32 [0, 1]
image = tf.image.resize(image, (224, 224))
image = tf.expand_dims(image, 0)  # add batch dimension -> [1, 224, 224, 3]

grpc_request = predict_pb2.PredictRequest()
grpc_request.model_spec.name = "mobilenet-v3-small"  # must match `name` in models.config
grpc_request.model_spec.signature_name = "serving_default"
grpc_request.inputs["inputs"].CopyFrom(tf.make_tensor_proto(image.numpy(), dtype=tf.float32))

predictions = stub.Predict(grpc_request, timeout=10.0)

# Rebuild the 'logits' TensorProto as a numpy array of shape [1, num_classes]
outputs_tensor_proto = predictions.outputs["logits"]
shape = tf.TensorShape(outputs_tensor_proto.tensor_shape)
outputs = np.array(outputs_tensor_proto.float_val).reshape(shape.as_list())

outputs = tf.nn.softmax(outputs, axis=1)
top = tf.argmax(outputs, axis=1).numpy()[0]
print("Predicted Class:", top)
print("Probability:", float(outputs[0][top]))
```
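The last lines of `client.py` turn the raw `logits` response into a prediction: the flat `float_val` list is reshaped to `[batch, num_classes]`, a softmax converts the row into probabilities, and the argmax picks the top class. The same post-processing, sketched in plain Python (no TensorFlow or NumPy) to make each step explicit; `softmax` and `top_class` here are hypothetical helper names, not library functions.

```python
import math


def softmax(row):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def top_class(float_val, shape):
    """Return (index, probability) of the most likely class for the first image in the batch.

    `float_val` is the flat list from the TensorProto and `shape` is [batch, num_classes].
    """
    batch, num_classes = shape
    if len(float_val) != batch * num_classes:
        raise ValueError("float_val does not match shape")
    first_row = list(float_val[:num_classes])  # logits for outputs[0]
    probs = softmax(first_row)
    best = max(range(num_classes), key=lambda i: probs[i])
    return best, probs[best]


print(top_class([0.1, 2.0, -1.0, 0.5], [1, 4]))
```

Subtracting the maximum logit leaves the softmax unchanged but avoids overflow in `math.exp` for large logits.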

We can invoke our RPC by running the script:

```shell
python client.py --host <YOUR ENDPOINT HERE>
```

For example, if the Endpoint is https://mobilenet-v3-small-tf-9000.abc.xyz.example.com, run:

```shell
python client.py --host mobilenet-v3-small-tf-9000.abc.xyz.example.com
```

Note: Do not include `https://` in the `--host` option.
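The `--host` value is passed straight to `grpc.secure_channel`, so it must be a gRPC target (`host` or `host:port`) rather than a URL; when no port is given, gRPC's DNS resolver defaults to 443. A minimal sketch of turning the Endpoint shown on the platform into that value with `urllib.parse`; the helper `endpoint_to_grpc_target` is hypothetical.

```python
from urllib.parse import urlsplit


def endpoint_to_grpc_target(endpoint):
    """Strip the scheme and any path from an Endpoint URL, keeping host[:port].

    Hypothetical helper: values without a scheme are treated as bare hosts and
    only have surrounding whitespace and trailing slashes removed.
    """
    endpoint = endpoint.strip()
    if "://" not in endpoint:
        return endpoint.rstrip("/")
    return urlsplit(endpoint).netloc


print(endpoint_to_grpc_target("https://mobilenet-v3-small-tf-9000.abc.xyz.example.com"))
```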

The first line of the output should look like:

```
Predicted Class: 238
```
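The printed value is an index into the model's logits. According to the model's Tensorflow Hub page, the classifier has 1001 outputs: index 0 is a "background" class, followed by the 1000 ImageNet classes, so a 1001-line label file starting with `background` (such as the `ImageNetLabels.txt` file used in the TensorFlow tutorials) can be indexed directly. A minimal sketch, assuming you have such a file locally; `load_labels` and `label_for` are hypothetical helpers.

```python
from pathlib import Path


def load_labels(path):
    """Read one label per line; for this 1001-class model, line 0 is 'background'."""
    return Path(path).read_text(encoding="utf-8").splitlines()


def label_for(class_index, labels):
    """Map a predicted logits index to its label, checking the list matches the model."""
    if len(labels) != 1001:
        raise ValueError(
            f"expected 1001 labels (background + 1000 ImageNet classes), got {len(labels)}"
        )
    return labels[class_index]
```

For example, `label_for(top, load_labels("ImageNetLabels.txt"))` would turn the index printed by `client.py` into a human-readable class name.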
